News

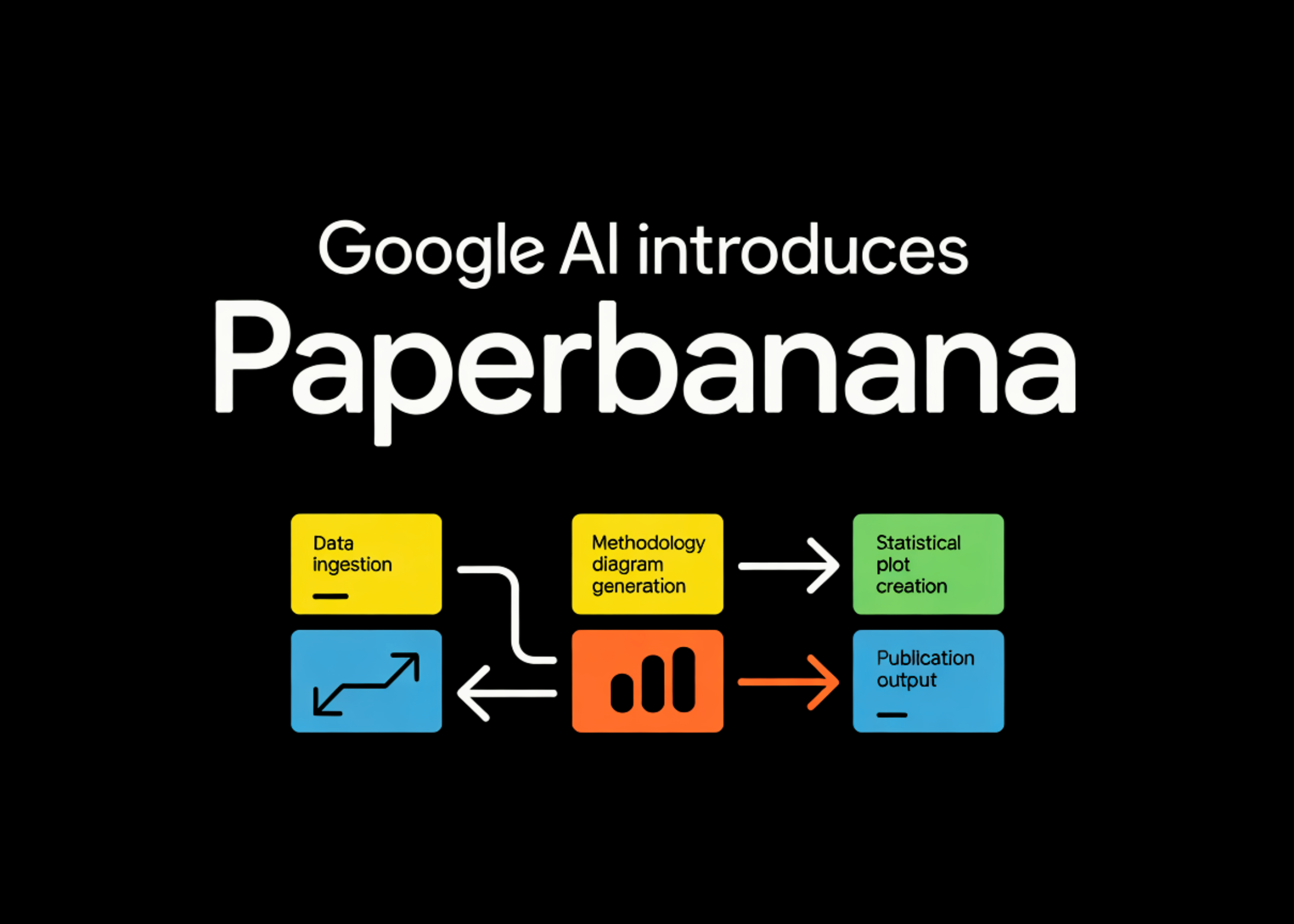

Google AI Introduces PaperBanana: An Agentic Framework that Automates Publication Ready Methodology Diagrams and Statistical Plots

3+ hour, 31+ min ago (151+ words) PaperBanana does not rely on a single prompt. It orchestrates a collaborative team of 5 agents to transform raw text into professional visuals. The research team introduced PaperBananaBench, a dataset of 292 test cases curated from actual NeurIPS 2025 publications. Using a VLM-as-a-Judge…...

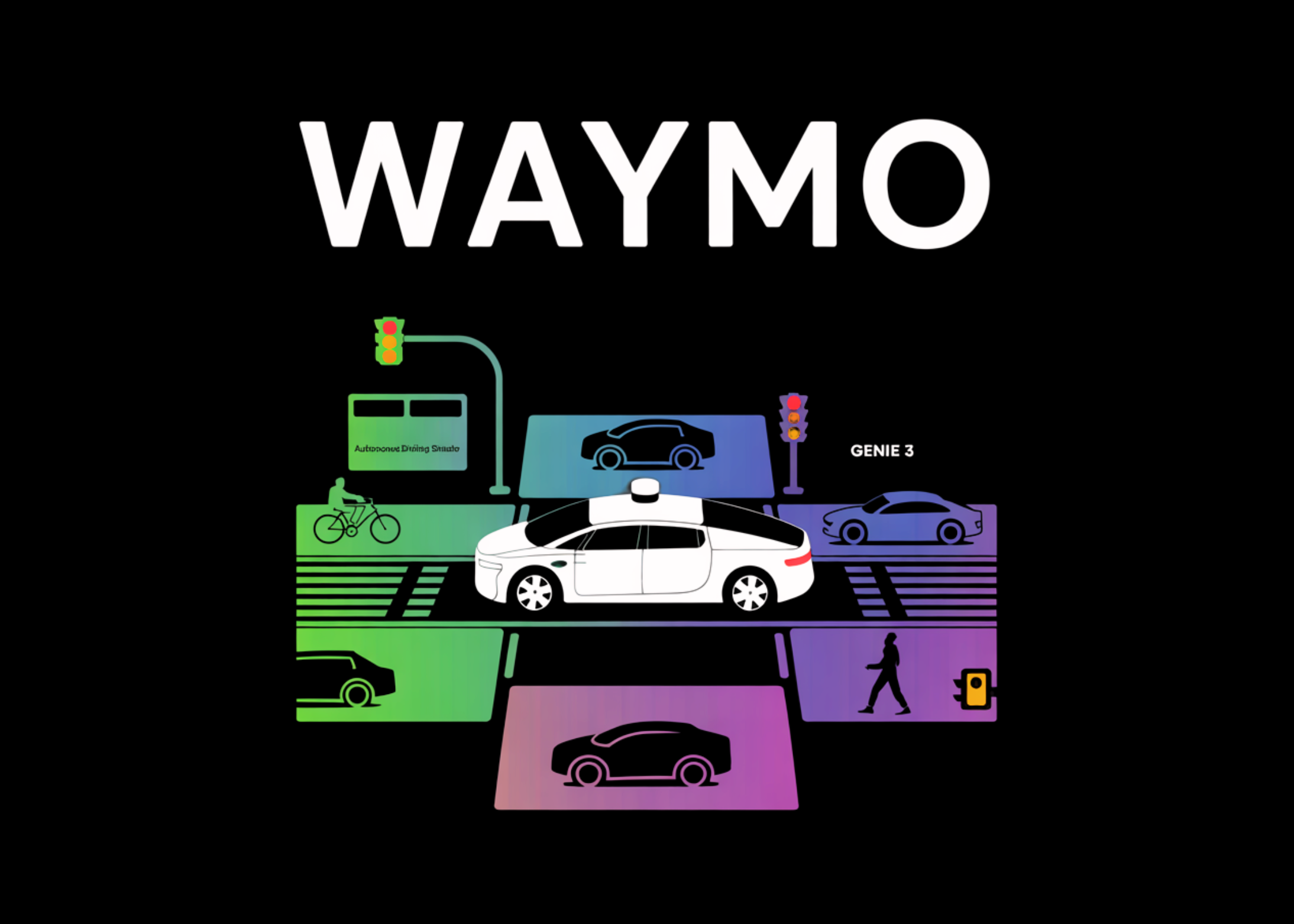

Waymo Introduces the Waymo World Model: A New Frontier Simulator Model for Autonomous Driving, Built on Top of Genie 3

1+ day, 3+ hour ago (636+ words) Genie 3 is a general-purpose world model that turns text prompts into interactive environments you can navigate in real time at roughly 24 frames per second, typically at 720p resolution. It learns the dynamics of scenes directly from large video corpora and supports…...

How to Build Production-Grade Data Validation Pipelines Using Pandera, Typed Schemas, and Composable DataFrame Contracts

2+ day, 4+ hour ago (264+ words) We set up the execution environment by installing Pandera and its dependencies and importing all required libraries. We confirm library versions to ensure reproducibility and compatibility. This establishes a clean foundation for enforcing typed data validation throughout the tutorial. Check…...
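
The version-confirmation step can be sketched with the standard library's `importlib.metadata`. This is a minimal sketch, not the tutorial's code: the `PACKAGES` list is a hypothetical example, since the tutorial's exact dependency list is not shown here.

```python
from importlib import metadata

# Hypothetical package list; the tutorial's actual dependencies are not shown.
PACKAGES = ["pandera", "pandas", "numpy"]


def report_versions(packages):
    """Return {package: installed version, or None if it is not installed}."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None  # missing from this environment
    return versions


if __name__ == "__main__":
    for name, version in report_versions(PACKAGES).items():
        print(f"{name}: {version or 'not installed'}")
```

Recording these versions next to the notebook's results makes it easier to tell whether a later validation failure comes from the data or from a library upgrade.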

NVIDIA AI Releases VibeTensor: An AI-Generated Deep Learning Runtime Built End to End by Coding Agents Programmatically

2+ day, 18+ hour ago (440+ words) NVIDIA has released VIBETENSOR, an open-source research system software stack for deep learning. VIBETENSOR is generated by LLM-powered coding agents under high-level human guidance. The system asks a concrete question: can coding agents generate a coherent deep learning runtime that…...

How to Build Efficient Agentic Reasoning Systems by Dynamically Pruning Multiple Chain-of-Thought Paths Without Losing Accuracy

2+ day, 22+ hour ago (304+ words) We set up the Colab environment and load all required libraries for efficient agentic reasoning. We initialize a lightweight instruction-tuned language model with quantization to ensure stable execution on limited GPU resources. We also define global configuration, randomness control, and…...

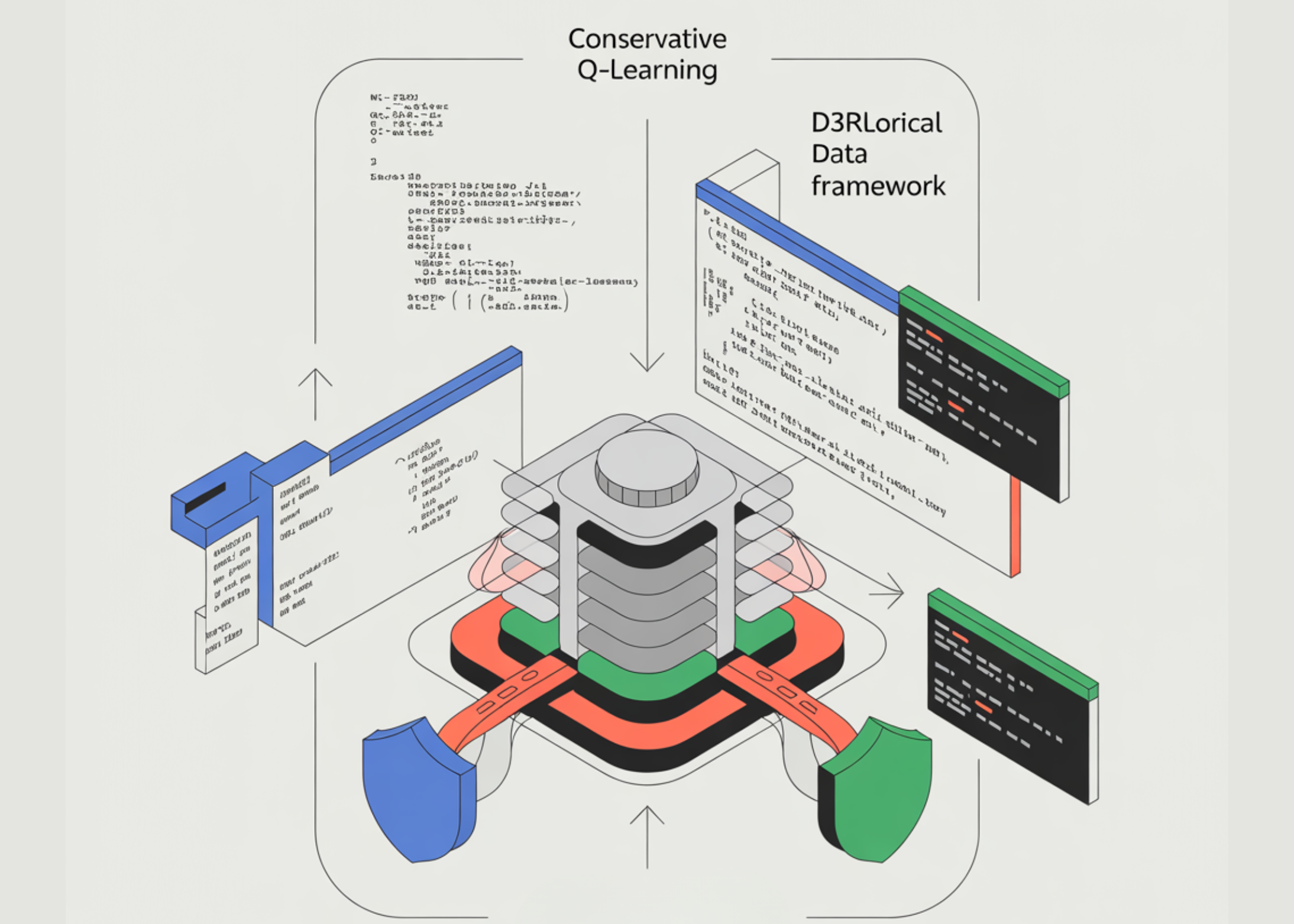

A Coding Implementation to Train Safety-Critical Reinforcement Learning Agents Offline Using Conservative Q-Learning with d3rlpy and Fixed Historical Data

3+ day, 17+ hour ago (268+ words) We set up the environment by installing dependencies, importing libraries, and fixing random seeds for reproducibility. We detect and configure the computation device to ensure consistent execution across systems. We also define a utility to create configuration objects safely across…...
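
Fixing random seeds can be illustrated with a small helper. This is a minimal, standard-library-only sketch, and the `set_seed` name is hypothetical. A d3rlpy/PyTorch notebook would typically also seed NumPy and PyTorch, which is omitted here so the example runs without those packages.

```python
import os
import random


def set_seed(seed: int) -> None:
    """Fix Python-level sources of randomness for reproducible runs."""
    random.seed(seed)
    # Only affects subprocesses started after this call; the current
    # interpreter's hash seed is fixed at startup.
    os.environ["PYTHONHASHSEED"] = str(seed)
    # In a NumPy/PyTorch notebook you would also call numpy.random.seed(seed)
    # and torch.manual_seed(seed); left out so this runs on the stdlib alone.


set_seed(42)
first = [random.random() for _ in range(3)]
set_seed(42)
second = [random.random() for _ in range(3)]
print(first == second)  # re-seeding with the same value replays the same sequence
```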

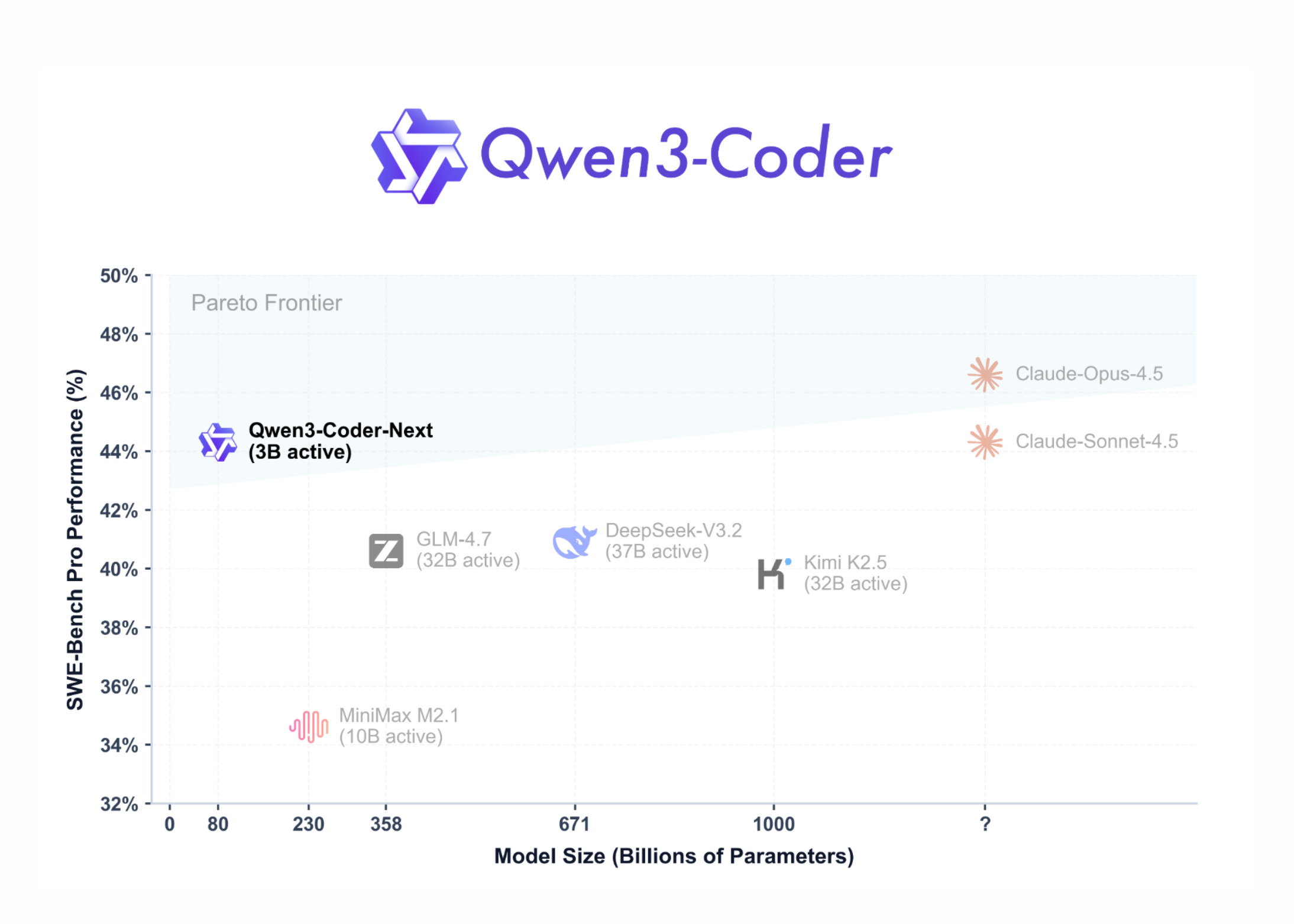

Qwen Team Releases Qwen3-Coder-Next: An Open-Weight Language Model Designed Specifically for Coding Agents and Local Development

4+ day, 1+ hour ago (469+ words) The model is positioned for agentic coding, browser-based tools, and IDE copilots rather than simple code completion. Qwen3-Coder-Next is trained on a large corpus of executable tasks and with reinforcement learning so that it can plan, call tools, run code, and…...

The Statistical Cost of Zero Padding in Convolutional Neural Networks (CNNs)

5+ day, 3+ hour ago (643+ words) What is Zero Padding? Zero padding is a technique used in convolutional neural networks where additional pixels with a value of zero are added around the borders of an image. This allows convolutional kernels to slide over edge pixels and…...
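
A small illustration of one statistical side effect of zero padding follows. It is a sketch, not the article's own analysis. A constant image of ones is zero-padded and passed through a 3x3 mean filter. An unbiased local average would be 1.0 everywhere, but outputs whose windows overlap the padding are pulled toward zero. The helper names `zero_pad` and `mean_filter_3x3` are hypothetical.

```python
def zero_pad(image, pad=1):
    """Surround a 2D list with `pad` rows and columns of zeros."""
    width = len(image[0]) + 2 * pad
    padded = [[0.0] * width for _ in range(pad)]
    for row in image:
        padded.append([0.0] * pad + list(row) + [0.0] * pad)
    padded.extend([[0.0] * width for _ in range(pad)])
    return padded


def mean_filter_3x3(image):
    """'Valid' 3x3 box filter: the output shrinks by 2 in each dimension."""
    h, w = len(image), len(image[0])
    out = []
    for i in range(1, h - 1):
        row = []
        for j in range(1, w - 1):
            window = [image[i + di][j + dj] for di in (-1, 0, 1) for dj in (-1, 0, 1)]
            row.append(sum(window) / 9)
        out.append(row)
    return out


# Constant 4x4 image: every pixel is 1.0.
image = [[1.0] * 4 for _ in range(4)]
filtered = mean_filter_3x3(zero_pad(image, pad=1))
for row in filtered:
    print([round(v, 3) for v in row])
```

Corner outputs see only 4 real pixels out of 9 (4/9), edge outputs see 6 (6/9), and only interior outputs recover 1.0. The border statistics therefore differ systematically from the interior even though the input is uniform.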

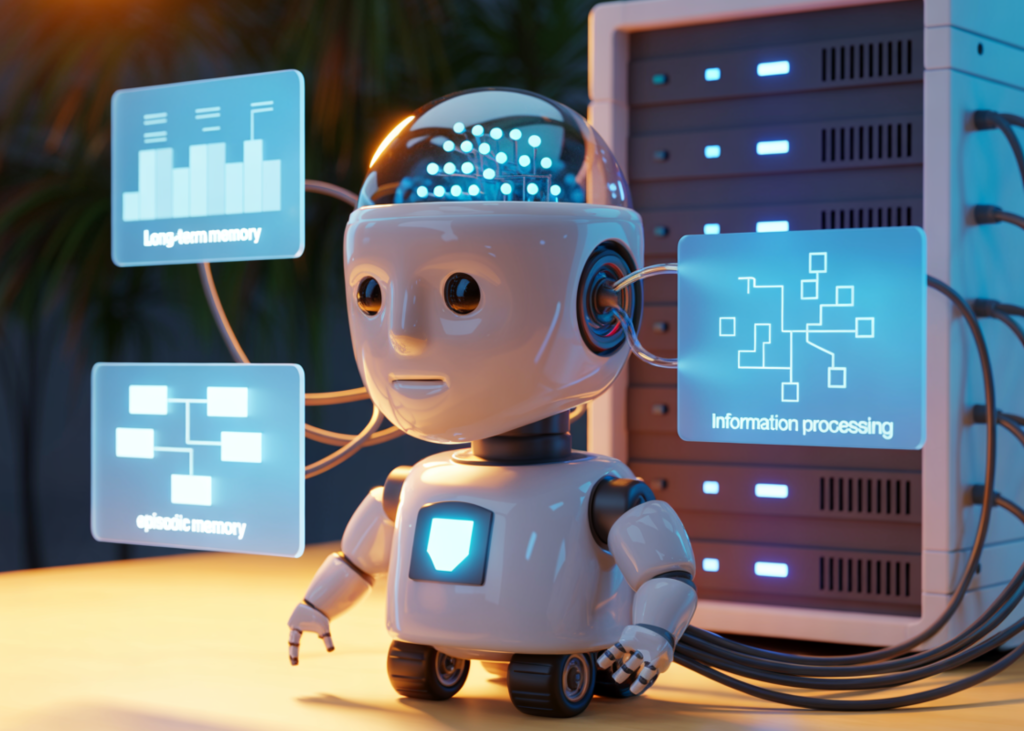

How to Build Memory-Driven AI Agents with Short-Term, Long-Term, and Episodic Memory

5+ day, 17+ hour ago (294+ words) We set up the execution environment and ensure all required libraries are available. We handle optional OpenAI integration while keeping the notebook fully runnable without any API keys. We establish the base imports and configuration that the rest of the…...
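
The optional-integration pattern can be sketched as follows. The code uses the OpenAI client only when both the `openai` package and an `OPENAI_API_KEY` environment variable are present, and otherwise falls back to a deterministic offline stub. This is an assumption about how such a notebook might be structured, not the tutorial's code. The helper names and the model name are placeholders.

```python
import os


def make_llm_backend():
    """Return ("openai", client) when the SDK and a key are available, else ("offline", None)."""
    api_key = os.environ.get("OPENAI_API_KEY")
    if not api_key:
        return "offline", None
    try:
        from openai import OpenAI  # optional dependency
    except ImportError:
        return "offline", None
    return "openai", OpenAI(api_key=api_key)


def complete(prompt, backend):
    """Answer a prompt with whichever backend was selected."""
    kind, client = backend
    if kind == "offline":
        # Deterministic placeholder so downstream memory logic still runs without a key.
        return f"[offline] {prompt}"
    response = client.chat.completions.create(
        model="gpt-4o-mini",  # placeholder model name (assumption)
        messages=[{"role": "user", "content": prompt}],
    )
    return response.choices[0].message.content


backend = make_llm_backend()
print(backend[0], complete("Summarize today's episode.", backend))
```

Keeping the selection in one function means the memory components only ever call `complete`, so the notebook behaves the same whether or not a key is configured.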

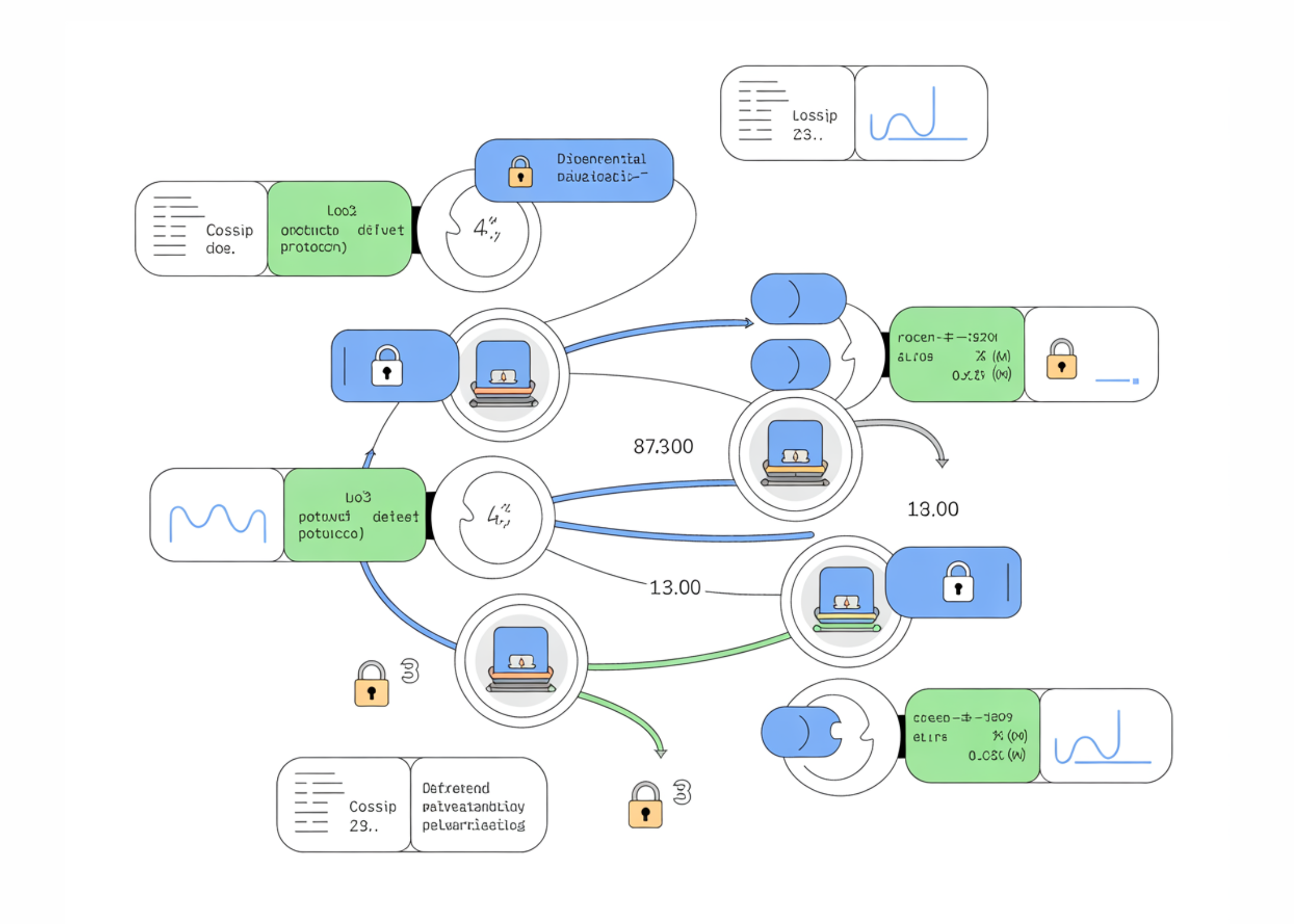

A Coding and Experimental Analysis of Decentralized Federated Learning with Gossip Protocols and Differential Privacy

5+ day, 21+ hour ago (315+ words) We set up the execution environment and install all required dependencies. We initialize random seeds and device settings to maintain reproducibility across experiments. We also load the MNIST dataset, which serves as a lightweight yet effective benchmark for federated learning…...
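
The core idea of gossip-based aggregation can be shown with one synchronous averaging rule on a ring. This is a generic sketch and not necessarily the protocol used in the article. Each node repeatedly averages its value with its two neighbours, and with no central server all nodes converge to the global mean. The scalar "parameters" are hypothetical stand-ins for model weights, and differential-privacy noise is omitted.

```python
def gossip_round(values):
    """One synchronous gossip round on a ring: each node averages itself with both neighbours."""
    n = len(values)
    return [(values[(i - 1) % n] + values[i] + values[(i + 1) % n]) / 3 for i in range(n)]


# Hypothetical per-node scalar parameters; real runs exchange full weight vectors.
values = [0.0, 3.0, 6.0, 9.0, 12.0]
for _ in range(30):
    values = gossip_round(values)

# Every node ends up close to the global mean, 6.0.
print([round(v, 3) for v in values])
```

Because each round uses a symmetric, doubly stochastic mixing rule, the sum of the values is preserved and the spread between nodes shrinks every round. A DP variant would add calibrated noise to each node's update before it is shared.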